We Have the Technology to Read... Minds?!

A research group from the Kyushu Institute of Technology has announced that it has successfully read certain words and letters from people’s minds without them saying anything.

By SoraNews24

https://upload.wikimedia.org/wikipedia/commons/3/3f/%DA%86%D9%87%D8%A7%D8%B1_%D9%81%D8%B5%D9%84.png

The study was carried out by a team led by Professor Toshimasa Yamazaki from the Systems Engineering Department of the Kyushu Institute of Technology. The team gathered a few dozen men and women and analyzed their brainwaves as they said the Japanese words used in the game rock (gu), paper (pa), scissors (choki).

They then asked the subjects to simply think hard about each word, without speaking, and measured their brainwaves again. Comparing the two sets of measurements, they found similar patterns in both. Although a given word generated the same waves whether spoken or thought, the patterns were different for each person.

They also expanded the tests to include the Japanese words for summer (natsu), winter (fuyu), spring (haru) and autumn (aki), and from two of those words they could isolate the brainwave patterns for the Japanese letters ha, ru, na and tsu with 80 to 90 percent accuracy.

http://en.rocketnews24.com/2016/01/07/researchers-use-uniformity-of-japanese-language-to-read-peoples-minds/

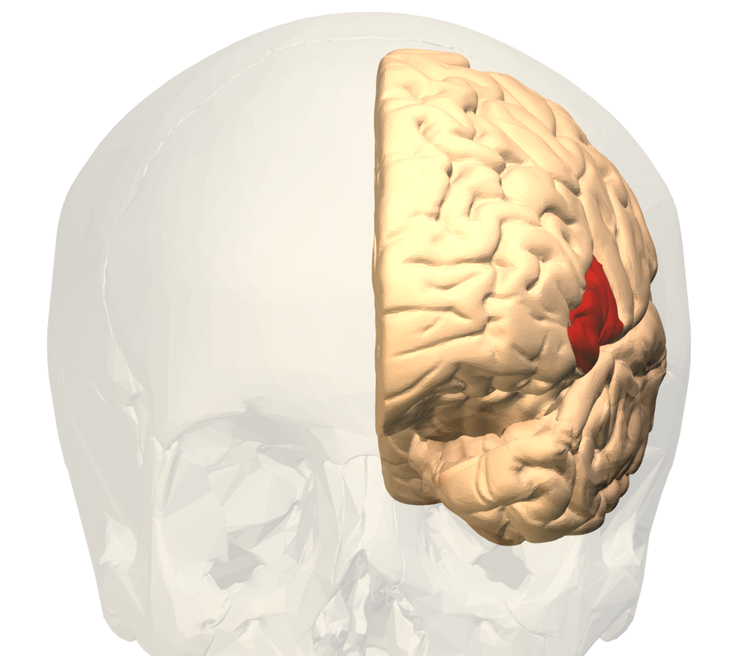

Professor Yamazaki and his group focused their study on the part of the brain known as Broca’s area (in red), which is associated with human speech. According to the professor, Broca’s area triggers a signal from the brain called a “readiness potential,” then prepares the muscles needed to utter the word conjured in one’s mind. So, any time you think of a word, your brain is already working to make your body say it, even if you don’t.

This signal causes a slight disturbance in the person’s brainwaves, which can be detected by EEG. Since different sounds require different muscle combinations, each sound’s readiness potential would be unique, as would the disturbance it causes in the brainwaves, making these verbal thoughts possible to decipher.


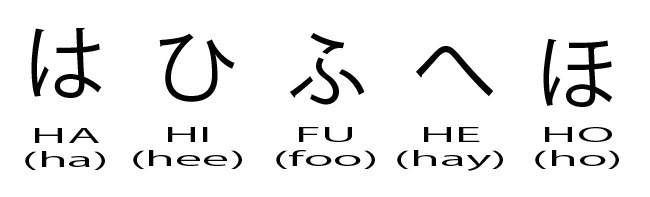

Although possible, this method is far from easy. Research into reading words from the mind through brainwave analysis has been going on for decades, but the problem is so complex that little progress had been made until now. Part of the reason Professor Yamazaki and his group have made such a breakthrough is that they’re working in Japanese. One roadblock researchers met in the past was that they could successfully read vowel sounds from brainwaves but could not identify those associated with consonant sounds. The Japanese language has a distinct advantage here because nearly every character has a vowel sound in it (as shown in the picture above).

In addition, the number of vowel sounds is very limited compared to other languages, making it much easier to identify and catalog each element. English, on the other hand, with its plethora of voiceless consonant sounds and wide range of vowel sounds, would be vastly more difficult to read in people’s brainwaves.

That’s why Professor Yamazaki is confident that Japan will lead the way in this type of mind-reading technology. With more advancement, the benefits could be huge, such as aiding communication for people with speech disabilities, and perhaps even for patients locked in a coma or state of shock. It could also allow people to interact with each other in places where speaking aloud isn’t practical, such as underwater or in outer space.
